Okay, WDD readers, this one is going to get real conceptual. I’m here to talk about leaving two-dimensional user interfaces behind. Ditching the screen. Going 3D in the real world. Well, you know what I mean: the stuff of science fiction that always ends up looking sort of fun, but really tiring and impractical. And that’s before the holodeck malfunctions and Picard starts shooting the Borg with hard light.

Anyway, everything I’m writing about here has little to no practical application outside of VR… for now. Most of this won’t be applicable to web design for a little while. We’ll get there. And in the meantime, it’s fun to speculate on what it will look like…

The Challenges

Display

We don’t have true 3D user interfaces right now, because the tech just isn’t there yet. It’s getting there, but we don’t have anything commercially ready for UI designers to obsess over. Holograms are getting better, and easier to produce. Motion input is also getting better and better, but it’s not as precise as we’d like it to be, yet.

Part of the problem is that holograms usually require multiple light sources, and the ones that don’t instead rely on reflective surfaces to simulate those sources. Both of these restrictions tend to work against the free-form physical interactions people want out of a hologram. Basically, we’re waiting until we can form holograms from particles that are easier to manipulate.

The closest we can get right now is VR. But, well, it’s virtual. It’s a simulation of a 3D interface on a pair of flat screens. So it only half counts. That said, VR will probably end up being our 3D UI mockup tool of the future.

Input

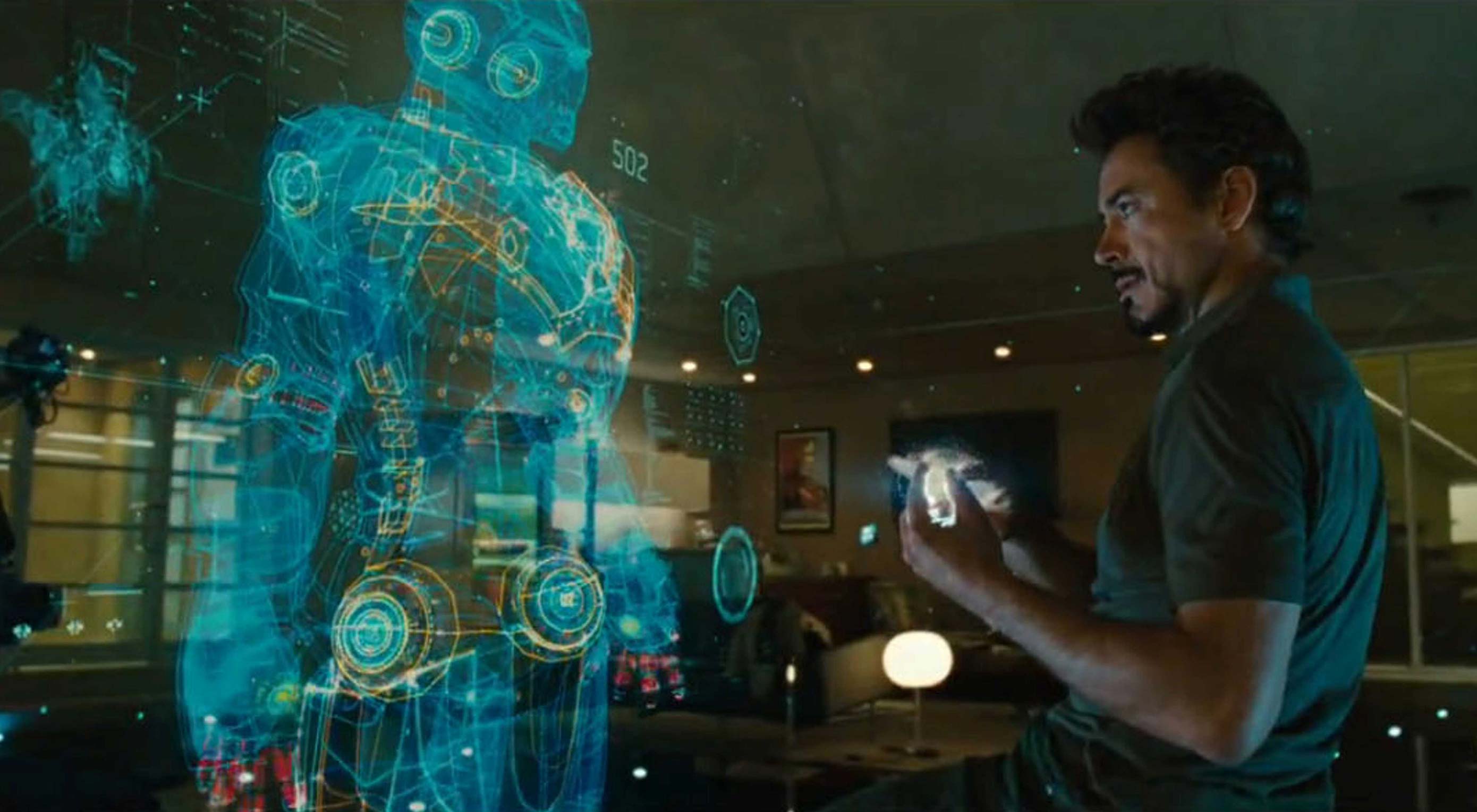

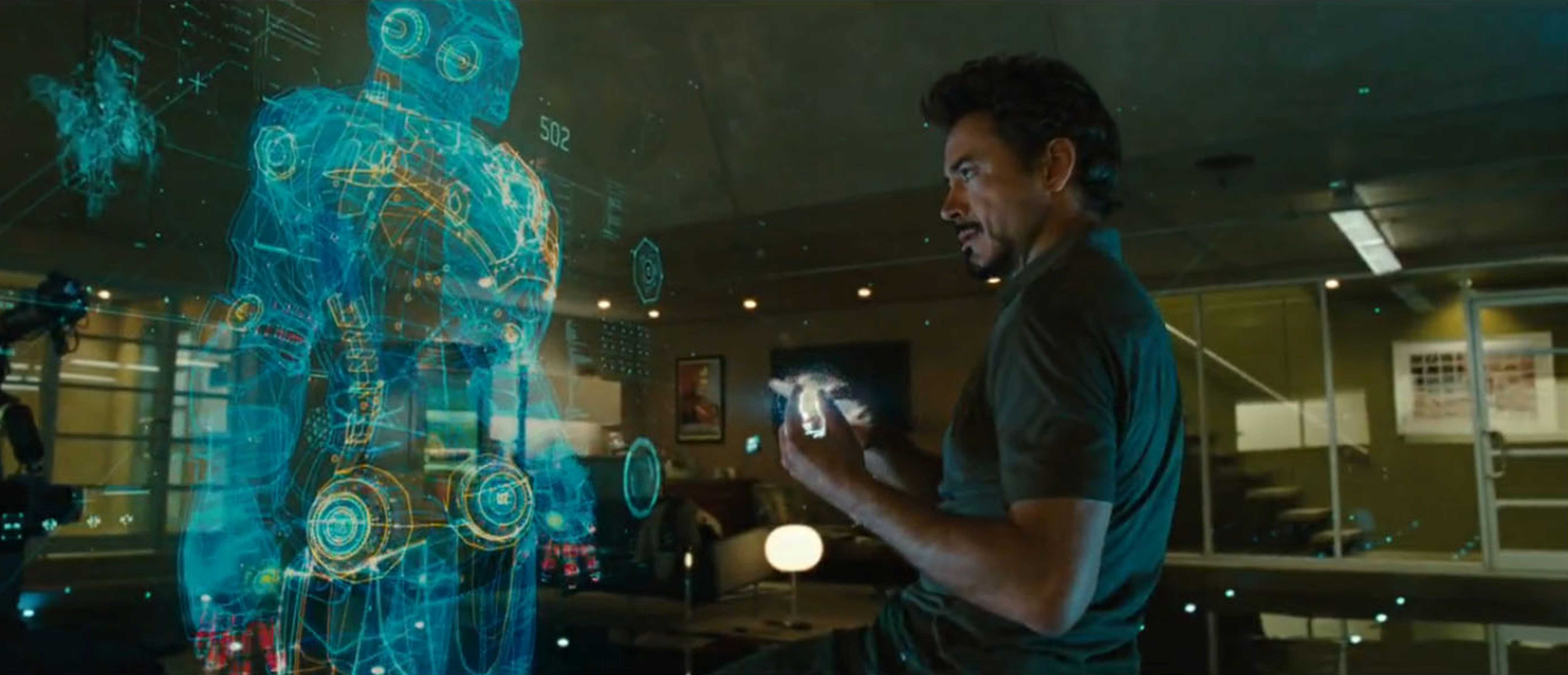

Okay, we’ve all watched Tony Stark wave his hands around and yell at Jarvis to do stuff in the Iron Man movies, and it looks awesome. The only problem is that waving your arms around like that for long periods is actually really tiring. Ask any actor who has had to pretend to interact with these systems. It’s just not practical for long work sessions.

The part the movies got right was the inclusion of voice input. Sure, we don’t have AIs like Jarvis yet, but voice commands are coming along. Any interface that would otherwise require us to stand up and reach across the table is going to get a lot of use out of voice commands.

People don’t even use really big touch screens if they can avoid it. Small ones, yes. Big ones mostly get used when they’re tilted to a near-horizontal angle for drawing.

Organization of Information and Buttons

Technical diagrams and blueprints may look great in 3D. Maybe even movies, too, one day. However, plain old two-dimensional text is still one of the most efficient ways of conveying a lot of information. It’s cognitively efficient, and byte efficient. We may develop a 3D language one day, but as of now, text looks best on a 2D plane.

Look at VR. Look at the menus in apps and games. They’re all represented on 2D planes. So are most of our buttons. I mean, I don’t want to walk across the room to press a button either.

My Predictions

The mouse is still the most precise input tool we have, for now. The keyboard is still the fastest way to input text with minimal editing required. I don’t think they’re just going away. In fact, a sort of 3D mouse that turns small movements of the hand into a cursor that moves about the larger 3D interface might make more sense than stretching your arms the whole width of your projector-screen-thing to manipulate information.
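To make the 3D-mouse idea a little more concrete, here’s a rough TypeScript sketch of how such a peripheral might turn small hand movements into big cursor movements: multiply each movement by a gain factor, then clamp the result so the cursor can’t wander out of the workspace. Everything in it is hypothetical, including the `Vec3` and `Bounds` types, the `moveCursor()` function, and the gain value. No real device or API is implied.

```typescript
// A point or displacement in 3D space (units are arbitrary; assume centimetres for the hand).
interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Hypothetical bounds of the projected 3D workspace the cursor lives in.
interface Bounds {
  min: Vec3;
  max: Vec3;
}

// Keep a value between lo and hi.
const clamp = (v: number, lo: number, hi: number): number =>
  Math.min(hi, Math.max(lo, v));

// Scale a small hand movement by `gain` and apply it to the cursor,
// clamping each axis so the cursor stays inside the workspace.
function moveCursor(cursor: Vec3, handDelta: Vec3, gain: number, bounds: Bounds): Vec3 {
  return {
    x: clamp(cursor.x + handDelta.x * gain, bounds.min.x, bounds.max.x),
    y: clamp(cursor.y + handDelta.y * gain, bounds.min.y, bounds.max.y),
    z: clamp(cursor.z + handDelta.z * gain, bounds.min.z, bounds.max.z),
  };
}
```

With a gain of 10, nudging your hand 2 cm to the right moves the cursor 20 units across the workspace. That’s the whole appeal: small, comfortable movements with a big reach, and no stretching your arms across the room.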

Those sorts of peripherals would also get us around the problems that keep us from taking a literally hands-on approach, such as light emitters and reflective surfaces getting in the way of our hands. The actual visual part of the UI might look something like the one featured in Iron Man, but with more context for the information presented.

In the Iron Man examples, all information being presented to the user is brought to the fore by Jarvis, on request. It’s also organized with lots of (completely transparent) white space, with a noticeable lack of a window metaphor. Without Jarvis, we’ll need a more deliberate way to contextualize everything we see in a UI.

For 2D information, the window concept might very well remain in play. For information displayed in three dimensions, it might simply become a “box” metaphor, with translucent lines showing where one 3D app ends, and where the next one begins. Input will have to be contextual, with the cursor constrained to two dimensions when editing text, and freed up under other circumstances.
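Here’s a similarly hypothetical TypeScript sketch of that contextual input. When the user is editing text, the cursor’s depth is pinned to the flat panel the text sits on, so only the two in-plane axes respond; the rest of the time, the cursor moves freely in all three dimensions. The `InputMode` type, the `TextPanel` shape (assumed here to be a flat panel facing the viewer at a fixed depth), and `applyMovement()` are all made up for illustration.

```typescript
// Hypothetical input contexts: editing text on a 2D panel, or moving freely in 3D.
type InputMode = "text" | "free";

interface Vec3 {
  x: number;
  y: number;
  z: number;
}

// Assumed: a flat text panel facing the viewer at a fixed depth in the scene.
interface TextPanel {
  depth: number;
}

function applyMovement(cursor: Vec3, delta: Vec3, mode: InputMode, panel: TextPanel): Vec3 {
  if (mode === "text") {
    // Editing text: ignore depth movement and pin the cursor to the panel's plane.
    return { x: cursor.x + delta.x, y: cursor.y + delta.y, z: panel.depth };
  }
  // Anywhere else: the cursor is free to move in all three dimensions.
  return { x: cursor.x + delta.x, y: cursor.y + delta.y, z: cursor.z + delta.z };
}
```

A real system would also need to decide when to switch modes, for example when the cursor enters a text field’s box, but the core idea is just that: the same hand movement means different things depending on context.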

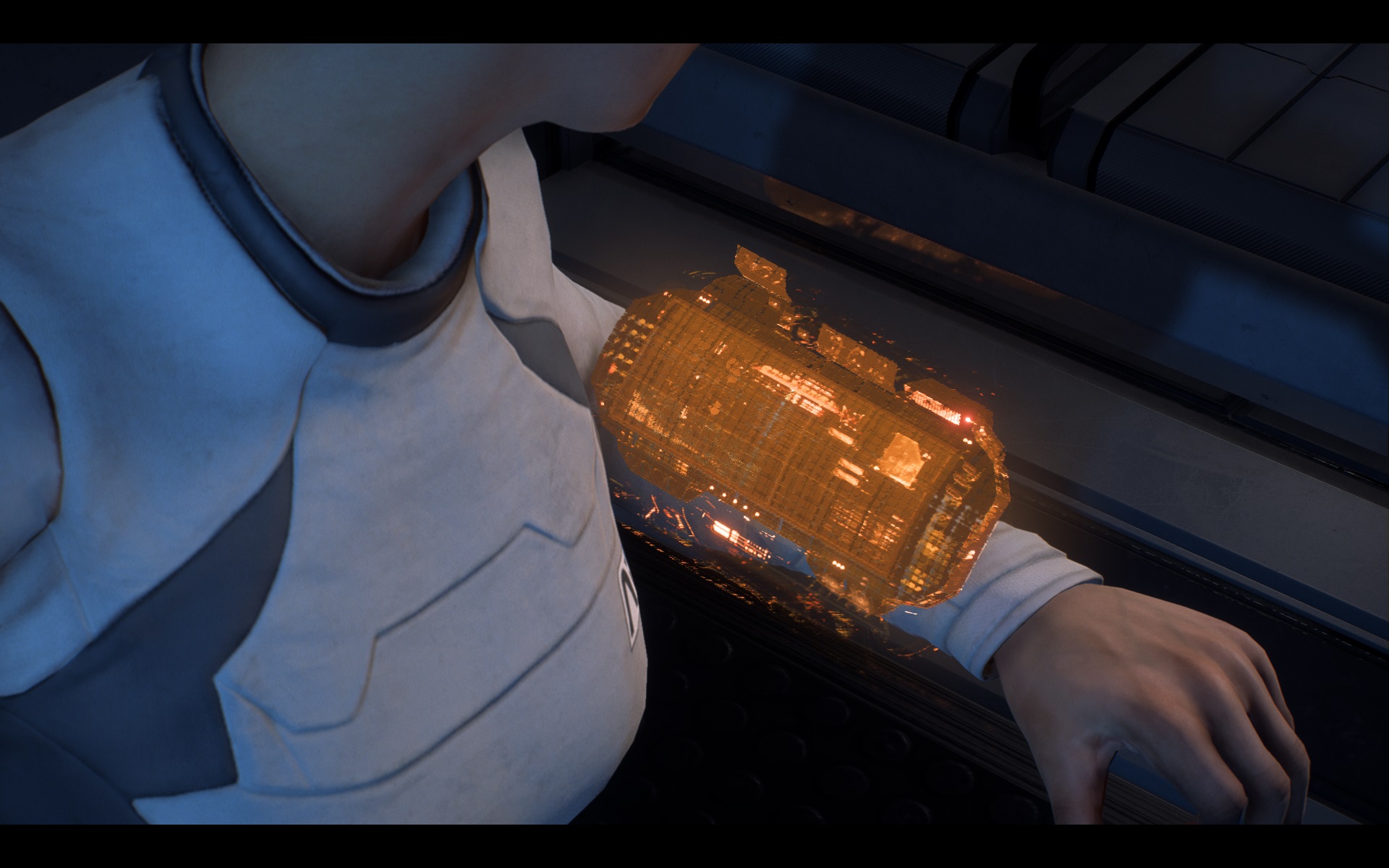

Going beyond anything possible with today’s tech, another good example of a practical holographic interface is the Omni-tool from the Mass Effect franchise. Goodness knows how they get the holograms to work when the device is implanted into you (usually in the non-dominant wrist, like a watch), but let’s ignore the science for a bit.

The Omni-tool is a purely practical device, used for communications, data analysis, and interacting with unfamiliar hardware. Oh, and stabbing people. Look, we’re ignoring the science!

The point is, it’s a practical interface because the UI is entirely contextual, and adapts to whatever it is you’re doing. It’s also operated with the fingers of one hand. No huge gestures required. Note that every other UI in the Mass Effect universe is either on a flat surface, or activated by voice.

Conclusion

Hollywood artists like to envision a world of completely unusable interfaces. Real 3D UIs will probably look more familiar than most of us seem to want to believe.

And I haven’t even touched on accessibility. How do people who can’t speak, or who can’t easily move their arms because of arthritis, interact with a huge holo-table UI? What if multiple people try to interact with it at once?

These are the kinds of questions we’ll have to answer before we embrace arm-waving as the future.

from Webdesigner Depot https://www.webdesignerdepot.com/2017/09/what-3d-uis-will-probably-look-like/